Now, we are also seeing a growing marketplace for stolen ChatGPT premium accounts on the dark web – which has potential major implications for personal and corporate privacy. ChatGPT accounts store the recent queries of the account’s owner. So when cybercriminals steal existing accounts, they gain access to the queries from the account’s original owner. This can include personal information, details about corporate products and processes, and more.

Sergey Shykevich, Threat Intelligence Group Manager, Check Point Research

Since December 2022, Check Point Research (CPR) has been raising concerns about ChatGPT's implications for cybersecurity. CPR now warns of an increase in the trade of stolen ChatGPT Premium accounts, which enable cybercriminals to get around OpenAI's geofencing restrictions and gain unlimited access to ChatGPT.

The market for account takeovers (ATOs), that is, stolen accounts for various online services, is one of the most flourishing markets in the hacking underground and on the dark web. Traditionally, this market focused on stolen financial services accounts (banks, online payment systems, etc.), social media, online dating websites, email, and more.

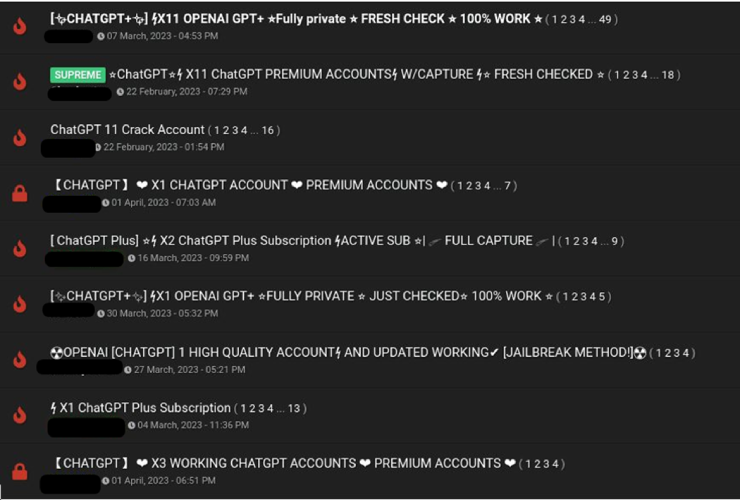

Since March 2023, CPR has observed an increase in the discussion and trade of stolen ChatGPT accounts, with a focus on Premium accounts:

1. Leaks and free publication of ChatGPT account credentials

2. Trade of stolen premium ChatGPT accounts

3. Brute-forcing and checker tools for ChatGPT: tools that allow cybercriminals to hack into ChatGPT accounts by running huge lists of email addresses and passwords, trying to guess the right combination to access existing accounts.

4. ChatGPT Accounts as a Service: a dedicated service that opens ChatGPT premium accounts, most likely using stolen payment cards.

Why is the market for stolen ChatGPT accounts on the rise, and what are the main concerns? As we wrote in previous blogs, ChatGPT imposes geofencing restrictions that block access to its platform from certain countries (including Russia, China and Iran). We recently highlighted that using the ChatGPT API allows cybercriminals to bypass various restrictions, as does using a ChatGPT premium account.

All this leads to increasing demand for stolen ChatGPT accounts, especially paid premium accounts. In the dark web underground, wherever there is demand, there are smart cybercriminals ready to take advantage of the business opportunity.

Meanwhile, over the last few weeks there has been discussion of ChatGPT's privacy issues, with Italy banning ChatGPT and Germany considering a ban as well. We highlight another potential privacy risk of this platform: ChatGPT accounts store the recent queries of the account's owner, so when cybercriminals steal existing accounts, they gain access to the original owner's queries. These can include personal information, details about corporate products and processes, and more.

Trade of Stolen ChatGPT Accounts

Cybercriminals often exploit the fact that users recycle the same password across multiple platforms. Using this knowledge, malicious actors load lists of email and password combinations into dedicated software (known as an account checker) and run it against a specific online platform to identify which sets of credentials are valid logins for that platform.

The account takeover itself occurs when a malicious actor takes control of an account without the account holder's authorization.

Over the last month, CPR observed increased chatter in underground forums about leaking or selling compromised ChatGPT premium accounts:

Sergey Shykevich, Threat Intelligence Group Manager, Check Point Research, said, "AI is a powerful tool. At Check Point Software, we use AI in our ThreatCloud to detect and block cyber-attacks in real time. Unfortunately, cyber criminals are also early adopters of AI. Since December, CPR has warned that ChatGPT also has implications for cybersecurity. Now, we are also seeing a growing marketplace for stolen ChatGPT premium accounts on the dark web, which has potential major implications for personal and corporate privacy."

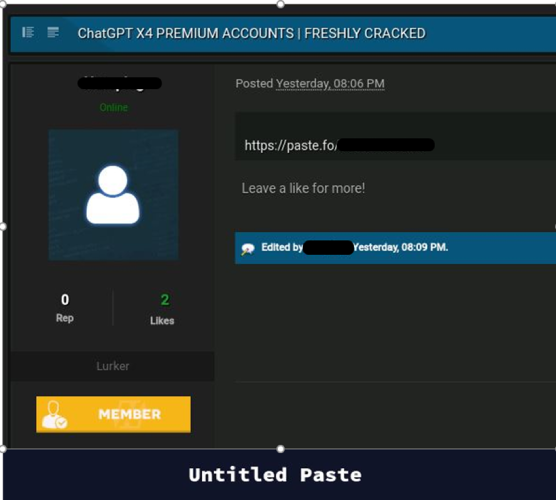

Most of these stolen accounts are sold, but some actors also share stolen ChatGPT premium accounts for free to advertise their own services or account-stealing tools. In the following example, a cybercriminal shared four stolen premium ChatGPT accounts. The way these accounts were shared, and their structure, led CPR to conclude that they were stolen using a ChatGPT account checker.

Tools to Hack into ChatGPT Accounts: Account Checkers and Configuration Files for Brute-Forcing Tools

SilverBullet is a web testing suite that allows users to send requests to a target web application and offers many tools for working with the results. It can be used for scraping and parsing data, automated pen testing, unit testing through Selenium, and much more. Cybercriminals also frequently use it to conduct credential stuffing and account checking attacks against various websites, and thus to steal accounts on online platforms.

Because SilverBullet is a configurable suite, running a checking or brute-forcing attack against a particular website requires a "configuration" file that tailors the process to that website and lets cybercriminals steal its accounts in an automated way.

In this case, CPR identified cybercriminals offering a SilverBullet configuration file that checks sets of credentials against OpenAI's platform in an automated way, enabling them to steal accounts at scale. The process is fully automated and can run between 50 and 200 checks per minute (CPM). It also supports proxies, which in many cases allows it to bypass websites' protections against such attacks.
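To illustrate why proxy support matters, here is a minimal defensive sketch, not taken from SilverBullet, OpenAI, or any specific website: a hypothetical per-address sliding-window counter of failed logins. The class name `FailedLoginMonitor`, the threshold of 50 failures per 60 seconds, and the addresses are illustrative assumptions. It flags a single address running checks at the rate described above, while the same volume rotated across many proxies stays under the per-address threshold.

```python
from collections import deque
from typing import Deque, Dict


class FailedLoginMonitor:
    """Hypothetical sketch: flags a key (e.g. a source IP) whose failed
    logins within the last `window_seconds` exceed `threshold`."""

    def __init__(self, threshold: int = 50, window_seconds: float = 60.0) -> None:
        self.threshold = threshold
        self.window = window_seconds
        # One deque of failure timestamps per key.
        self._events: Dict[str, Deque[float]] = {}

    def record_failure(self, key: str, timestamp: float) -> bool:
        """Record one failed login for `key` at `timestamp` (in seconds).
        Returns True if `key` is now over the threshold."""
        events = self._events.setdefault(key, deque())
        events.append(timestamp)
        # Drop failures that have fallen out of the sliding window.
        while events and timestamp - events[0] >= self.window:
            events.popleft()
        return len(events) > self.threshold


# 200 failed checks from one address within one minute (0.3 s apart).
monitor = FailedLoginMonitor(threshold=50, window_seconds=60.0)
single = [monitor.record_failure("203.0.113.7", i * 0.3) for i in range(200)]
print(any(single))  # True: the single address is flagged

# The same 200 checks rotated across 50 hypothetical proxy addresses.
monitor_rotated = FailedLoginMonitor(threshold=50, window_seconds=60.0)
rotated = [monitor_rotated.record_failure(f"proxy-{i % 50}", i * 0.3) for i in range(200)]
print(any(rotated))  # False: each proxy sees only 4 failures
```

Keying the counter on the source address is exactly the kind of per-client protection that proxy rotation spreads thin, which is the bypass described above.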

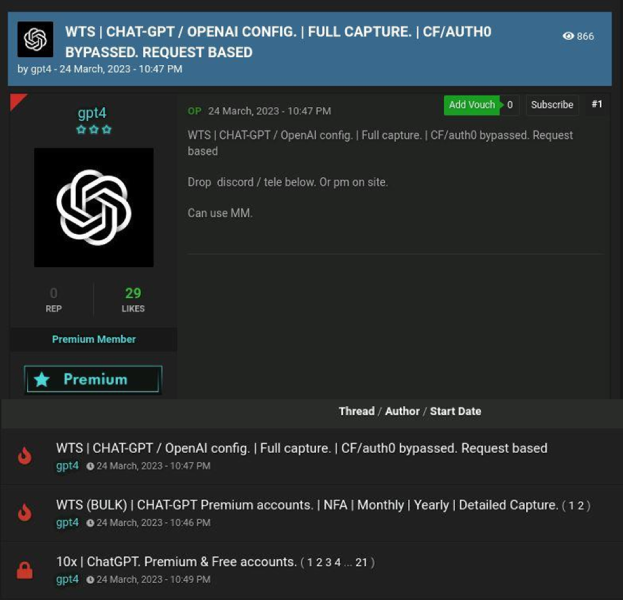

Another cybercriminal, who focuses solely on abuse and fraud against ChatGPT products, even named himself "gpt4". In his threads, he offers for sale not only ChatGPT accounts but also a configuration for another automated tool that checks credential validity.

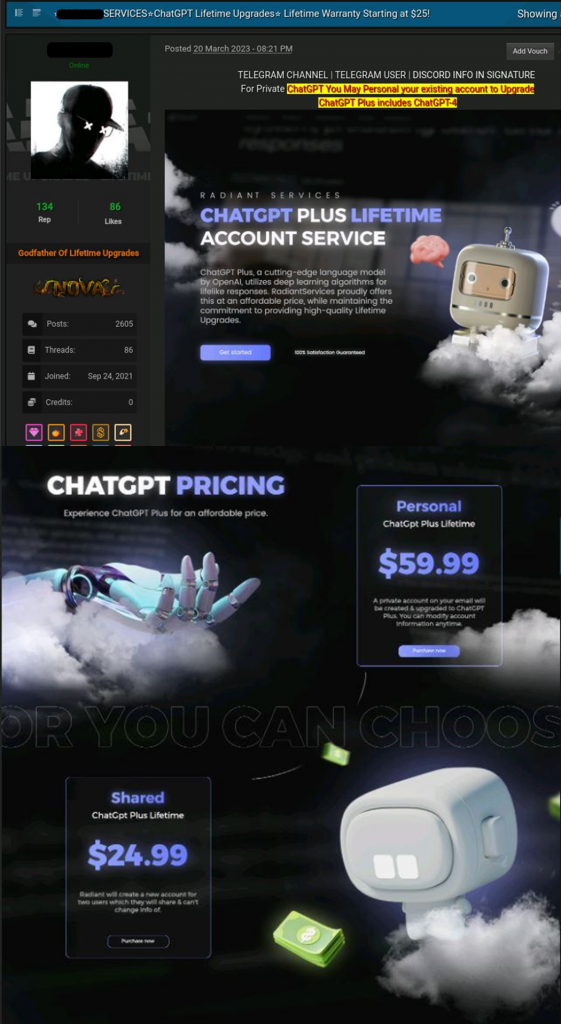

ChatGPT Plus Lifetime Upgrade Service

On March 20th, an English-speaking cybercriminal started advertising a ChatGPT Plus lifetime account service, with 100% satisfaction guaranteed.

The lifetime upgrade of a regular account to ChatGPT Plus (opened via an email address provided by the buyer) costs $59.99, while OpenAI's legitimate price for this service is $20 per month. To reduce costs further, this underground service also offers a lifetime option to share access to a ChatGPT account with another cybercriminal for $24.99.
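For a sense of scale, the advertised prices can be compared with the legitimate subscription, assuming the $20 monthly price quoted above stays fixed:

$$
\frac{59.99\ \text{USD}}{20\ \text{USD/month}} \approx 3.0\ \text{months}, \qquad
\frac{24.99\ \text{USD}}{20\ \text{USD/month}} \approx 1.25\ \text{months}
$$

In other words, "lifetime" access is offered for roughly what three months of a legitimate subscription would cost, and the shared option for a little over one month's worth.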

A number of underground users have already left positive feedback on this service and vouched for it.

As in other illicit cases where a threat actor offers services at a price significantly lower than the legitimate one (for another example, see our blog on underground travel ticket services), we assess that the upgrades are paid for with previously compromised payment cards.